Retrieving large public datasets from academic repositories is a critical task for researchers in data-intensive fields such as machine learning, bioinformatics, and computational linguistics. However, downloading terabytes of data without breaching rate limits or connection timeouts can be a serious challenge, especially when dealing with repositories like Kaggle, Zenodo, Hugging Face, or OpenNeuro. That’s where open-source download managers step in as valuable tools, helping researchers increase reliability, resume interrupted downloads, and parallelize transfers efficiently.

TLDR:

Researchers often rely on open-source download managers to fetch large datasets from academic sources without being blocked or throttled. These tools speed up transfers with parallel connections, resume interrupted downloads, and stay within rate limits through configurable waits and retries. Tools like aria2 and wget shine for their scriptability, while GUI-led options like Persepolis offer user-friendly alternatives. This article covers six open-source download managers suited to research-grade data collection.

1. aria2: The Versatile Powerhouse

aria2 is a lightweight command-line download utility that supports HTTP, HTTPS, FTP, SFTP, BitTorrent, and Metalink. Its ability to split files into segments and download them concurrently makes it a favorite for pulling down massive datasets from multiple mirrors.

- Multi-source download: aria2 can fetch pieces of a file from multiple sources simultaneously.

- Parallel connections: Opens several connections per file (up to 16 per server) so segments download concurrently.

- Resume support: Interrupted transfers can be resumed with a simple command.

Researchers especially appreciate its straightforward scripting capabilities, allowing batch download via simple shell commands or integration into Python workflows.
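As a rough illustration of that kind of Python integration, the sketch below builds an `aria2c` command line that fetches one file from several mirrors with resume enabled. The function name, mirror URLs, and default values are hypothetical; the flags themselves (`--continue`, `--split`, `--max-connection-per-server`, `--max-tries`, `--retry-wait`, `--dir`) are standard aria2c options.

```python
import shlex
import subprocess
from typing import List, Sequence


def build_aria2_command(
    mirrors: Sequence[str],
    out_dir: str,
    connections: int = 8,
) -> List[str]:
    """Build an aria2c argv list that fetches ONE file from several mirrors."""
    if not mirrors:
        raise ValueError("at least one mirror URL is required")
    # aria2c accepts at most 16 connections per server.
    per_server = max(1, min(connections, 16))
    return [
        "aria2c",
        "--continue=true",                # resume a partially downloaded file
        f"--split={connections}",         # total connections used for the file
        f"--max-connection-per-server={per_server}",
        "--max-tries=10",                 # illustrative value, not a recommendation
        "--retry-wait=30",                # seconds to wait between retries
        f"--dir={out_dir}",
        *mirrors,                         # several URIs that point at the same file
    ]


def run_download(cmd: List[str]) -> None:
    """Run the command; raises CalledProcessError if aria2c exits non-zero."""
    subprocess.run(cmd, check=True)


# Hypothetical mirrors, shown only to illustrate the resulting command line.
example = build_aria2_command(
    ["https://mirror-a.example.org/dataset.tar", "https://mirror-b.example.org/dataset.tar"],
    out_dir="data",
)
print(shlex.join(example))
```

Keeping command construction separate from execution makes the exact invocation easy to log alongside the results, which helps when a download has to be reproduced later.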

2. wget: The Time-Tested Standard

wget is one of the oldest and most reliable tools in the Unix-based open-source ecosystem. What it lacks in a user interface, it compensates for with rock-solid reliability and deep customization.

Some standout wget features include:

- Recursive downloading: Ideal for crawling entire directories or mirror sites.

- User agent spoofing: Helps bypass simplistic rate limits on academic repositories.

- Retry with backoff: The --tries and --waitretry options retry failed downloads with a linearly increasing wait, which helps avoid being blocked for repeated requests.

Its mature lifecycle and extensive documentation make it a dependable tool for educational institutions and research labs that demand reproducible data-fetch pipelines.
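One way to make such a pipeline reproducible is to generate the wget invocation from code. The sketch below assembles a recursive, resumable wget command with waits and retries; the function name, default values, and the example URL and user-agent string are assumptions made for illustration, while the flags are documented wget options.

```python
import shlex
from typing import List, Optional


def build_wget_mirror_command(
    url: str,
    dest_dir: str,
    tries: int = 8,
    wait_seconds: int = 2,
    user_agent: Optional[str] = None,
) -> List[str]:
    """Build a wget argv list for a polite, resumable recursive download."""
    cmd = [
        "wget",
        "--recursive",                     # follow links below the start URL
        "--no-parent",                     # never ascend above the start directory
        "--continue",                      # resume partially downloaded files
        f"--tries={tries}",                # attempts per file
        "--waitretry=30",                  # linear backoff between retries, capped at 30 s
        f"--wait={wait_seconds}",          # base pause between successive requests
        "--random-wait",                   # vary that pause between 0.5x and 1.5x
        f"--directory-prefix={dest_dir}",  # where downloaded files are stored
    ]
    if user_agent:
        cmd.append(f"--user-agent={user_agent}")
    cmd.append(url)
    return cmd


# Hypothetical repository URL and user-agent string.
print(shlex.join(build_wget_mirror_command(
    "https://data.example.org/pub/corpus/",
    dest_dir="corpus",
    user_agent="lab-fetcher/0.1 (contact: data-team@example.org)",
)))
```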

3. Persepolis: GUI Front-end for aria2

Persepolis Download Manager is an open-source GUI front-end for aria2, designed for users who prefer a point-and-click interface over command-line tools. Behind the graphical shell, it relies on aria2 for the downloads themselves, so core capabilities such as segmented transfers remain available.

Reasons researchers choose Persepolis:

- Visual management: Manage and schedule downloads using a clean and intuitive interface.

- Segmented downloading: Like aria2, it downloads files in multiple parts to maximize speed.

- Cross-platform: Runs on Windows, Linux, and macOS.

This tool is particularly useful in collaborative research environments where community members may not be comfortable writing shell scripts, but need access to robust download capabilities.

4. rclone: Cloud-to-Local Dataset Retrieval

rclone excels in syncing files between cloud services and local storage. With support for over 40 cloud providers—including Google Drive, Dropbox, and Amazon S3—rclone is a favorite for researchers who store intermediate results or datasets in the cloud.

Key features:

- Remote mounting: Access cloud drives as if they were local storage for seamless dataset retrieval.

- Checksum verification: Ensures data integrity for scientific reproducibility.

- Bandwidth throttling and retry logic: Prevents high-volume transfers from being throttled by cloud providers.

For research teams that work across multiple locations or need to sync shared cloud-based storage to HPC resources, rclone provides an invaluable link.
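A minimal sketch of how a sync to local or HPC storage might be scripted is shown below. The remote name `labdrive:` and the paths are hypothetical, as are the function names and default values; `rclone copy`, `rclone check`, `--checksum`, `--bwlimit`, `--transfers`, and `--retries` are existing rclone commands and flags.

```python
import shlex
from typing import List


def build_rclone_copy_command(
    remote_path: str,
    local_dir: str,
    bwlimit: str = "20M",
    transfers: int = 4,
    retries: int = 5,
) -> List[str]:
    """Build an rclone argv list that copies a remote directory to local storage."""
    return [
        "rclone", "copy", remote_path, local_dir,
        "--checksum",                # compare by size and checksum, not modification time
        f"--bwlimit={bwlimit}",      # cap bandwidth to stay under provider limits
        f"--transfers={transfers}",  # number of files transferred in parallel
        f"--retries={retries}",      # retry the whole operation if any transfer fails
    ]


def build_rclone_check_command(remote_path: str, local_dir: str) -> List[str]:
    """Build an rclone argv list that compares source and destination after the copy."""
    return ["rclone", "check", remote_path, local_dir]


# "labdrive:" is a hypothetical remote configured beforehand with `rclone config`.
print(shlex.join(build_rclone_copy_command("labdrive:datasets/mri", "/scratch/mri")))
print(shlex.join(build_rclone_check_command("labdrive:datasets/mri", "/scratch/mri")))
```

Running the check step after the copy gives an explicit record that the local files match the remote, which is useful evidence for reproducibility.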

5. axel: Simple and Fast Parallel Downloader

axel is a minimalist command-line download accelerator built for simplicity and performance. It handles only HTTP and FTP transfers and opens several connections per file, without the complexity of a full-featured download manager.

Why researchers like axel:

- Parallel downloads: Splits files into segments for faster transfer.

- Lightweight: Very low dependency footprint makes it ideal for cluster nodes and remote servers.

- Resume support: An interrupted download can be resumed from the state file (.st) that axel keeps next to the partial download.

axel is often used in headless or high-performance computing environments where bandwidth maximization is essential, but complex logic isn’t necessary.
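Segmented downloading of this kind generally rests on HTTP Range requests: each connection asks the server for a different byte range of the same file. The sketch below shows one way the ranges could be computed. It illustrates the general idea only and is not axel's actual algorithm; the function names are hypothetical.

```python
from typing import Dict, List, Tuple


def split_byte_ranges(total_size: int, segments: int) -> List[Tuple[int, int]]:
    """Split a file of total_size bytes into contiguous, inclusive byte ranges."""
    if total_size <= 0 or segments <= 0:
        raise ValueError("total_size and segments must be positive")
    segments = min(segments, total_size)  # never create empty segments
    base, extra = divmod(total_size, segments)
    ranges = []
    start = 0
    for i in range(segments):
        # The first `extra` segments take one additional byte each.
        length = base + (1 if i < extra else 0)
        end = start + length - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


def range_header(start: int, end: int) -> Dict[str, str]:
    """Build the HTTP header that requests bytes start..end (inclusive)."""
    return {"Range": f"bytes={start}-{end}"}


print(split_byte_ranges(10, 3))  # [(0, 3), (4, 6), (7, 9)]
print(range_header(0, 3))        # {'Range': 'bytes=0-3'}
```

This only works when the server honors Range requests; a server that ignores the header returns the whole file, in which case a single connection is the only option.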

6. Motrix: Modern Graphical Interface with Multithreaded Power

Motrix offers a modern GUI built on Electron and uses aria2 as its backend for high-performance downloads. While it’s more common in consumer-grade scenarios, researchers dealing with large media assets or files with torrent/magnet links often incorporate Motrix into their workflow.

Features attracting academic users:

- Up to 64 threads per download: Reduces total time for very large datasets.

- Built-in torrent support: Useful for P2P dataset distribution formats.

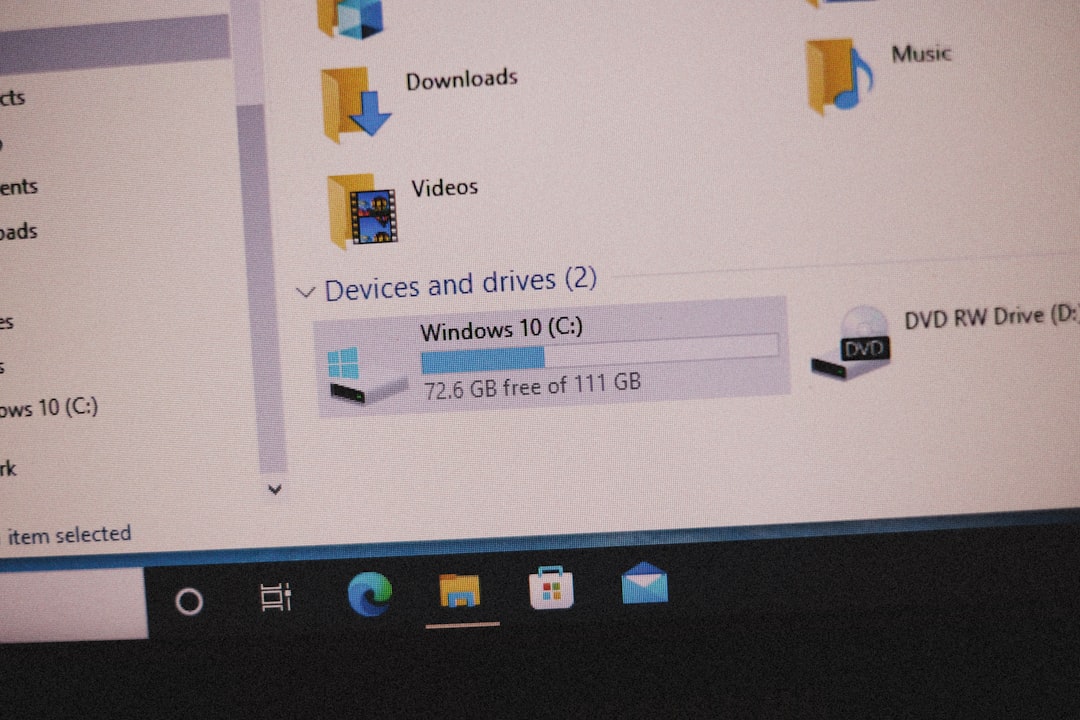

- Disk space analysis: Helps manage space for multi-terabyte downloads.

Motrix combines aesthetics with utility, making it suitable for both advanced users and those new to intensive data gathering.

Tips for Avoiding Rate Limits

- Use randomized wait times between requests to avoid triggering rate limit detection scripts.

- Authenticate when possible: Use API keys or OAuth tokens offered by academic repositories to prevent anonymous download caps.

- Use IP rotation: Through VPNs or proxies — especially helpful when pulling from distributed mirror networks.

- Check for rsync or direct FTP access: Repositories often allow alternate protocols more suitable for large-data transfers.
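The first two tips can be sketched in a few lines of Python. The example below adds a randomized pause between requests and attaches a token to each request. All function names are hypothetical, and the `Authorization: Bearer` scheme is an assumption: each repository documents its own authentication method, so check before relying on it.

```python
import random
import time
import urllib.request
from typing import Callable, Iterable, List, Optional


def jittered_delay(
    base_seconds: float,
    spread: float = 0.5,
    rng: Optional[random.Random] = None,
) -> float:
    """Return a wait time drawn uniformly from base*(1-spread) to base*(1+spread)."""
    if rng is None:
        rng = random.Random()
    return base_seconds * rng.uniform(1.0 - spread, 1.0 + spread)


def authenticated_request(url: str, token: str) -> urllib.request.Request:
    """Build a request carrying a bearer token (assumed scheme, see lead-in)."""
    request = urllib.request.Request(url)
    request.add_header("Authorization", f"Bearer {token}")
    return request


def fetch_politely(
    urls: Iterable[str],
    fetch: Callable[[str], bytes],
    base_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[bytes]:
    """Call fetch(url) for each URL, pausing a randomized interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(jittered_delay(base_seconds))  # no pause before the first request
        results.append(fetch(url))
    return results
```

Passing `fetch` and `sleep` in as parameters keeps the pacing logic independent of any particular HTTP client, so it can be exercised without touching the network.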

Conclusion

From simple tools like wget to full-featured engines like Motrix and rclone, researchers are spoiled for choice when it comes to open-source download managers. The needs of the project—whether it’s downloading terabytes of MRI data, syncing deep learning models from the cloud, or mirroring public FTP sites—determine which tool is most appropriate. Integrating these tools into scripting environments and research pipelines not only saves time but also increases the reproducibility and reliability of data-gathering tasks.

Frequently Asked Questions (FAQ)

<dl>